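To add a custom data extractor, open the Files tool window and go to Scratches and Consoles | Extensions | Database Tools and SQL | data | extractors. Right-click the extractors node and select New | File.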

DATAGRIP EXPORT TO CSV WINDOWS

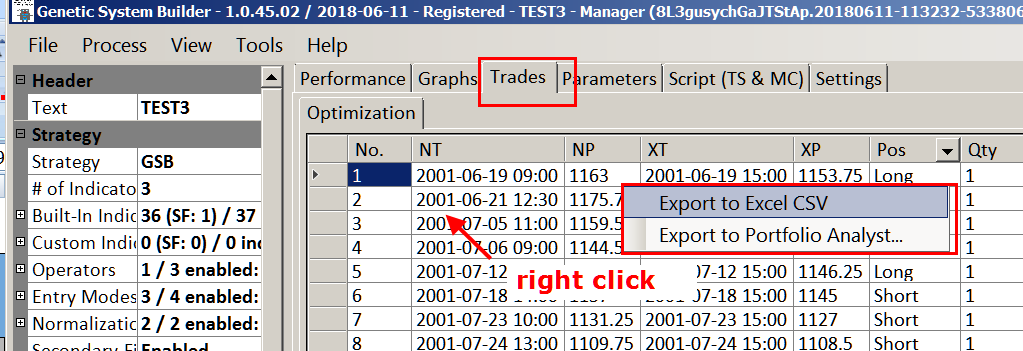

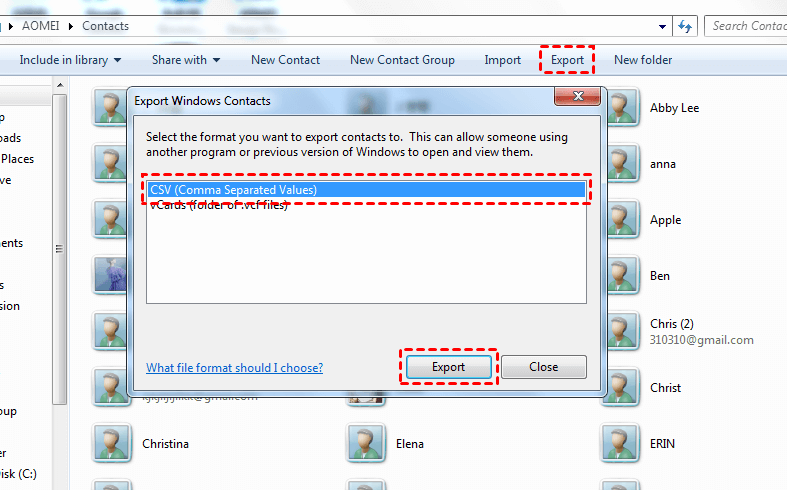

Once you have created a custom CSV format, you can select it in the drop-down list near the Export Data icon. For more information about the CSV Formats dialog, see that dialog's reference page in the DataGrip documentation.

You can also create your own extractor and write it in Groovy or JavaScript. To start, open the Files tool window (View | Tool Windows | Files) and navigate to Scratches and Consoles | Extensions | Database Tools and SQL | data | extractors.
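Real DataGrip extractors are Groovy or JavaScript scripts that get the result set through DataGrip's own API, and that API is not reproduced here. The Python sketch below only illustrates the core job of any custom extractor: it takes column names and rows and produces a new text format, which in this case is a Markdown table. The names `extract_markdown` and `_cell` and the `NULL` default are hypothetical. A script is useful here because values can contain the separator character and need escaping, and a plain DSV format does not handle that the way Markdown expects.

```python
from typing import Iterable, Optional, Sequence


def _cell(value: Optional[object], null_text: str) -> str:
    """Render one value; escape pipes and flatten newlines so the table stays intact."""
    if value is None:
        return null_text
    return str(value).replace("|", "\\|").replace("\n", " ")


def extract_markdown(
    columns: Sequence[str],
    rows: Iterable[Sequence[Optional[object]]],
    null_text: str = "NULL",
) -> str:
    """Hypothetical extractor: turn column names and rows into a Markdown table."""
    header = "| " + " | ".join(_cell(c, null_text) for c in columns) + " |"
    divider = "|" + "|".join("---" for _ in columns) + "|"
    body = ["| " + " | ".join(_cell(v, null_text) for v in row) + " |" for row in rows]
    return "\n".join([header, divider, *body])


print(extract_markdown(["id", "name"], [(1, "a|b"), (2, None)]))
```

In a real Groovy or JavaScript extractor, the same loop would run over the rows and columns that DataGrip passes to the script.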

Whether tables are created through an import process or from a Jupyter notebook, they need to be "registered" in the metastore. To do so, execute Spark's saveAsTable() function. Another option is to create the tables directly from external files (such as Parquet or CSV) using the external SQL tool. For example, to create a new table, execute a CREATE TABLE statement:

```sql
CREATE TABLE IF NOT EXISTS iris_dataset (
  `sl` double,
  `sw` double,
  `pl` double,
  `pw` double
)
LOCATION '/datasets/iris-dataset…'
```

In DataGrip, you can go beyond the default data extractors in two ways:

- Extractors for delimiter-separated values. Use them to create your own format based on CSV or any other DSV format. In a format's settings, you can set separators for rows and headers, define text for NULL values, and specify quotation.
- Custom data extractors. Create them in Groovy or JavaScript using the provided API.

To configure an extractor for delimiter-separated values:

1. From the list of data extractors, select Configure CSV Formats.
2. In the CSV Formats dialog, click the Add Format icon.
3. Specify a name for the new format (for example, Confluence Wiki Markup).
4. Define the settings of the format: set separators for rows and headers, define text for NULL values, and specify quotation.
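One way to see how the CSV Formats settings work together (header separator, row separator, NULL text, quotation) is to write them as a small Python class. This is a sketch, not DataGrip's implementation. The `DsvFormat` class and its field names are hypothetical, and the quoting rule is an assumption borrowed from ordinary CSV: quote a value when it contains the separator, the quote character, or a newline, and double any embedded quotes. The example configures a Confluence Wiki Markup-style format, in which header cells are separated by `||` and data cells by `|`.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Sequence


@dataclass
class DsvFormat:
    """Hypothetical stand-in for the settings in the CSV Formats dialog."""
    value_sep: str = ","   # separator between values in data rows
    header_sep: str = ","  # separator between column names in the header row
    wrap: bool = False     # also put the separator at the start and end of each line
    null_text: str = ""    # text written for NULL values
    quote: str = '"'       # quotation character

    def _quote(self, text: str, sep: str) -> str:
        # Quote only when the value would otherwise be ambiguous; double embedded quotes.
        if sep in text or self.quote in text or "\n" in text:
            return self.quote + text.replace(self.quote, self.quote * 2) + self.quote
        return text

    def _line(self, cells: Sequence[str], sep: str) -> str:
        joined = sep.join(cells)
        return f"{sep}{joined}{sep}" if self.wrap else joined

    def render(self, columns: Sequence[str], rows: Iterable[Sequence[Optional[object]]]) -> str:
        lines = [self._line([self._quote(c, self.header_sep) for c in columns], self.header_sep)]
        for row in rows:
            cells = [self.null_text if v is None else self._quote(str(v), self.value_sep) for v in row]
            lines.append(self._line(cells, self.value_sep))
        return "\n".join(lines)


confluence = DsvFormat(value_sep="|", header_sep="||", wrap=True, null_text="NULL")
print(confluence.render(["sl", "sw"], [(5.1, 3.5), (4.9, None)]))
```

With the default settings, the same class produces plain CSV. This reflects the point of DSV formats: only the separators and quoting change, and the row-by-row logic stays the same.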

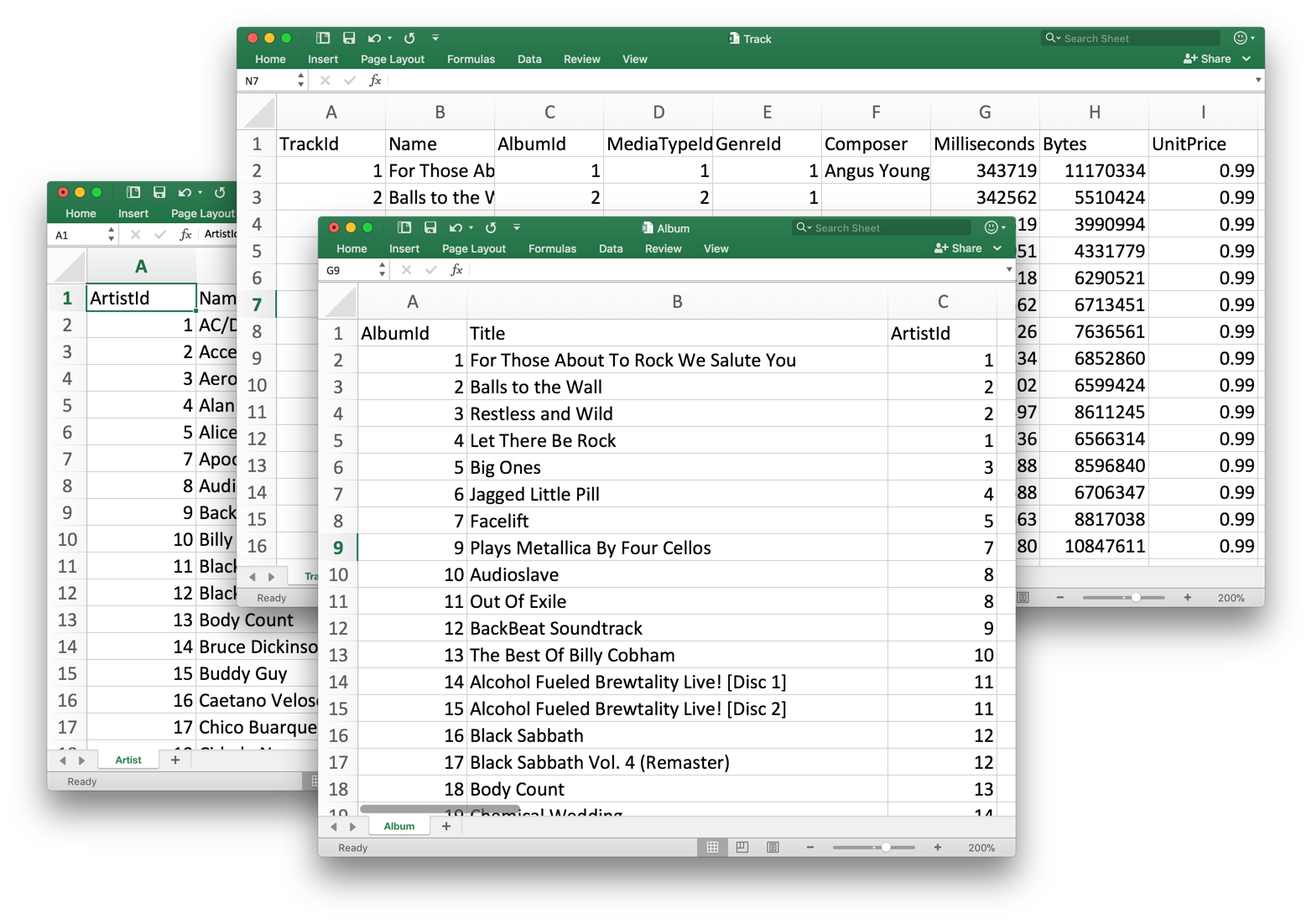

DATAGRIP EXPORT TO CSV CODE

The JDBC connectors should work with all JDBC-compatible clients. For this demonstration, we're going to use JetBrains's excellent DataGrip.

1. In DataGrip, click the "+" sign and select "Driver".
2. Click the "+" sign under "Driver files" and add both jars (the Hive JDBC connector and its Hadoop-core dependency).
3. Change the class to the one ending in `.HiveDriver`.
4. On the Options tab, select the Apache Spark option in both the Dialect and Icon drop-downs.
5. In Lentiq, click SparkSQL's Edit button and copy the JDBC URL. In the same dialog, on the Firewall tab, make sure your IP address is whitelisted for your current location.
6. In DataGrip, click the "+" sign and add a Data Source by selecting the newly added Hive 1.2.1 driver.
7. Execute some queries on the connection. If they fail, check the firewall settings from step 5.

SparkSQL scales horizontally, so if performance is not satisfactory, add more workers from SparkSQL's Configuration tab. Depending on your use case, you might also need to add more RAM to support more complex joins.

Tables are created either through an import process using a Reusable Code Block or via a Jupyter notebook.
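If the test queries fail, you can first check whether the problem is the firewall or the driver. To do this, see if the host and port in the copied JDBC URL accept TCP connections from your machine. The Python sketch below assumes the URL uses the usual HiveServer2 form `jdbc:hive2://host:port/database;options`, and that 10000 (the HiveServer2 default) is the right fallback when no port is given. The URL Lentiq shows may look different. The helper names `host_port_from_jdbc` and `is_reachable` are hypothetical.

```python
import socket
from typing import Tuple
from urllib.parse import urlsplit


def host_port_from_jdbc(url: str, default_port: int = 10000) -> Tuple[str, int]:
    """Extract host and port from a URL such as jdbc:hive2://host:10000/default;ssl=true."""
    if not url.startswith("jdbc:"):
        raise ValueError("expected a JDBC URL starting with 'jdbc:'")
    # Drop the 'jdbc:' prefix and any ';'-separated session options,
    # so urlsplit sees a plain 'hive2://host:port/db' URL.
    base = url[len("jdbc:"):].split(";", 1)[0]
    parts = urlsplit(base)
    if not parts.hostname:
        raise ValueError("no host found in JDBC URL")
    return parts.hostname, parts.port or default_port


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and DNS failures
        return False
```

For example, `host_port_from_jdbc("jdbc:hive2://myhost:10000/default")` returns `("myhost", 10000)`. If `is_reachable` returns False for that pair, the firewall allow list is a likely suspect. If it returns True but queries still fail, look at the driver configuration instead.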

DATAGRIP EXPORT TO CSV DRIVERS

SparkSQL is compatible with Apache Hive's JDBC connector version 1.x. The connector also has a Hadoop-core dependency that does not ship with it, so you need both jars. Create a directory for them:

```shell
mkdir ~/jdbc-drivers  # you can put these anywhere
cd ~/jdbc-drivers
```

Then configure your BI tool to use the JDBC drivers.
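The Hive 1.x connector does not work without the separate Hadoop-core jar, so before you configure DataGrip it can help to confirm that both jars are in the driver directory. The Python sketch below does that check. The file-name patterns `hive-jdbc-*.jar` and `hadoop-core-*.jar` and the function name `missing_jars` are assumptions; change the patterns to match the files you actually downloaded.

```python
from pathlib import Path
from typing import Dict, List

# Assumed file-name patterns for the two jars; adjust them to your downloads.
REQUIRED_JARS = {
    "Hive JDBC connector": "hive-jdbc-*.jar",
    "Hadoop core dependency": "hadoop-core-*.jar",
}


def missing_jars(driver_dir: Path, required: Dict[str, str] = REQUIRED_JARS) -> List[str]:
    """Return the descriptions of required jars with no matching file in driver_dir."""
    return [name for name, pattern in required.items() if not any(driver_dir.glob(pattern))]


if __name__ == "__main__":
    directory = Path.home() / "jdbc-drivers"
    missing = missing_jars(directory)
    print("All driver jars found" if not missing else f"Missing in {directory}: {', '.join(missing)}")
```

An empty result means both patterns matched, so you can add the two files under "Driver files" in DataGrip.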

Querying data using SQL is a basic but fundamental use of any data lake. Lentiq is compatible with most JDBC/ODBC-compatible tools and uses Apache Spark's query engine. The data is stored in Parquet format in object storage, and the schema is stored in a metastore database that is linked to Lentiq's metadata management system. The query engine is SparkSQL, which uses Spark's in-memory mechanisms and query planner to execute SQL queries on the data.

Deploy the Spark SQL application: from Lentiq's left-hand application panel, click the SparkSQL icon, then click Create Spark SQL.
